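1. Introduction

With the rapid growth of networks, network structures are becoming increasingly complex and congestion is increasingly likely [1]. If traffic can be predicted in advance and redirected accordingly, problems such as the delay caused by congestion can be effectively avoided; building an effective network traffic prediction model has therefore become an active research topic at home and abroad. Traditional traffic prediction models, such as the Markov, Poisson, and AR models, handle only stationary and non-stationary processes, whereas real network traffic exhibits pronounced multi-scale behavior and self-similarity, so their prediction errors are large. Reference [2] uses a BP neural network for traffic prediction and achieves a good fit and high prediction accuracy, but a BP neural network does not handle data with multi-scale characteristics well [2]. The Kohonen neural network is a self-organizing network trained with an unsupervised learning algorithm and is widely used in sample classification, ordering, and sample detection. A traffic prediction model based on the Kohonen neural network can both maintain prediction accuracy and improve the cognitive properties of the network [3]. In view of this, this paper proposes a new combined network traffic prediction model based on the Kohonen neural network.

2. Wavelet Decomposition of Network Traffic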

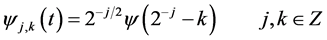

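The wavelet transform of a signal $x(t)$ is its projection onto a set of wavelet bases, which yields approximation signals shifted in time and detail signals scaled in scale (frequency). The wavelet basis comprises a family of functions $\psi_{j,k}(t)$, obtained from a prototype wavelet $\psi(t)$ by normalized translation and dilation, and a family of scaling functions $\varphi_{j,k}(t)$. The two function sets are [4]:

$$\psi_{j,k}(t) = 2^{-j/2}\,\psi(2^{-j}t - k) \tag{1}$$

$$\varphi_{j,k}(t) = 2^{-j/2}\,\varphi(2^{-j}t - k) \tag{2}$$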

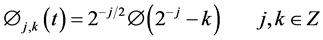

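Let $V_j$ denote the subspace spanned by the scaling function basis $\varphi_{j,k}(t)$ and $W_j$ the subspace spanned by the wavelet function basis $\psi_{j,k}(t)$. Decomposing $V_0$ orthogonally level by level gives Eq. (3):

$$V_0 = V_n \oplus W_n \oplus W_{n-1} \oplus \cdots \oplus W_1 \tag{3}$$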

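where $n$ is the number of decomposition levels. The approximation signal and the detail signals then follow:

$$A_n(t) = \sum_k a_{n,k}\,\varphi_{n,k}(t) \tag{4}$$

$$D_j(t) = \sum_k d_{j,k}\,\psi_{j,k}(t) \tag{5}$$

Here $D_j(t)$ is the detail signal at level $j$, and the coefficient $d_{j,k}$ is the inner product of the signal $x(t)$ with $\psi_{j,k}(t)$. The network traffic signal can therefore be represented losslessly by its approximation signal and the corresponding detail signals, that is,

$$x(t) = A_n(t) + \sum_{j=1}^{n} D_j(t) \tag{6}$$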
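The lossless split into one approximation signal and $n$ detail signals can be illustrated with a minimal Python sketch. It assumes the Haar wavelet, for which projecting onto the level-$j$ approximation space reduces to averaging over blocks of $2^j$ samples; the wavelet actually used is not stated in the text, and the names `block_average`, `haar_decompose`, and `reconstruct` are illustrative only.

```python
def block_average(x, size):
    """Replace every block of `size` samples by the block mean (Haar projection)."""
    out = []
    for start in range(0, len(x), size):
        block = x[start:start + size]
        mean = sum(block) / len(block)
        out.extend([mean] * len(block))
    return out


def haar_decompose(x, levels):
    """Return (A_n, [D_1, ..., D_n]) as full-length signals (Haar assumption)."""
    if len(x) % (2 ** levels) != 0:
        raise ValueError("signal length must be a multiple of 2**levels")
    approx = list(x)                      # A_0 is the signal itself
    details = []
    for j in range(1, levels + 1):
        coarser = block_average(x, 2 ** j)            # A_j
        details.append([a - c for a, c in zip(approx, coarser)])  # D_j = A_{j-1} - A_j
        approx = coarser
    return approx, details


def reconstruct(approx, details):
    """Sum the approximation and all detail signals sample by sample."""
    return [a + sum(d[i] for d in details) for i, a in enumerate(approx)]


if __name__ == "__main__":
    traffic = [3.0, 5.0, 4.0, 8.0, 6.0, 2.0, 7.0, 1.0]   # made-up hourly values
    a2, d = haar_decompose(traffic, 2)
    print(reconstruct(a2, d))
```

Because each detail signal is the difference of two successive approximations, the sum telescopes back to the original samples, which is the property the representation above relies on.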

3. Improved Traffic Prediction Model

3.1. Building the Combined Prediction Model Based on the Kohonen Neural Network

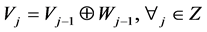

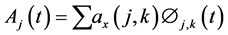

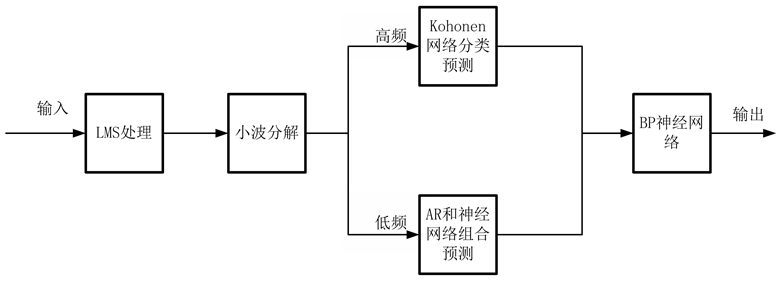

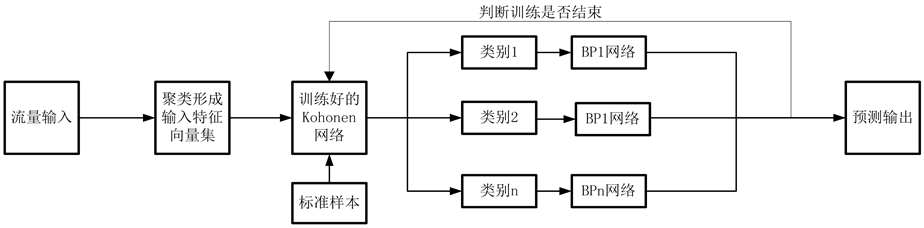

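The traditional wavelet neural network prediction model has shortcomings, notably overfitting: once the training capability reaches a certain limit, increasing it further lowers prediction accuracy instead of raising it [5]. This paper therefore proposes a prediction model based on the Kohonen neural network, assisted by a wavelet BP neural network. The new model first processes the input samples with the least squares method and then applies wavelet decomposition to the processed samples. The resulting high-frequency and low-frequency sequences are predicted separately, with the Kohonen network and the autoregressive (AR) model respectively, and a BP network finally fits the partial predictions to produce the final traffic forecast. The new traffic prediction model is shown in Figure 1.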

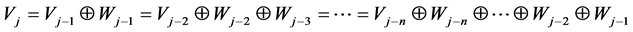

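3.2. Kohonen Neural Network Classification and Prediction Model

The Kohonen neural network is a self-organizing, unsupervised adaptive mapping model. For an input pattern x, the mapping finds the corresponding class in the output layer, that is, the best-matching neuron c. The Kohonen classification prediction model first classifies the standard samples and then performs BP network prediction separately for each class. The prediction model is shown in Figure 2.

The model combines the Kohonen neural network with the BP neural network. The Kohonen network is first trained on existing traffic data to produce an effective classification; the previous day's traffic input is then assigned to its class, and the BP network for that class predicts the next day's traffic.

3.3. Kohonen Classification Training Algorithm

The Kohonen training data come from previously measured traffic data; in this paper, network traffic sampled every hour over 15 days.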

Figure 1. Network prediction model

Figure 2. Classification and prediction with the Kohonen neural network

The algorithm proceeds as follows:

1) Determine the structure of the Kohonen neural network;

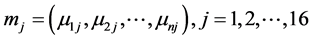

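2) Initialize the weight vectors $m_j$ randomly to small values, where $j$ indexes the neurons of the competitive layer;

3) From the set of input feature vectors, select one vector $x$ as the input to the Kohonen neural network;

4) Compute the degree of match between the input vector $x$ and each weight vector $m_j$, measured by the Euclidean distance:

$$\lVert x - m_c \rVert = \min_j \lVert x - m_j \rVert \tag{7}$$

The neuron $c$ is then the winning neuron for this input vector;

5) Update the weight vectors connected to the winning neuron $c$ and to the neurons within its geometric neighborhood $N_c(t)$ according to the following rule: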

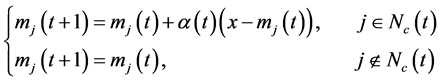

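$$m_j(t+1) = \begin{cases} m_j(t) + \alpha(t)\,[x(t) - m_j(t)], & j \in N_c(t) \\ m_j(t), & j \notin N_c(t) \end{cases} \tag{8}$$

where $\alpha(t)$ is the variable learning rate;

6) Repeat steps 3) to 5) until the winning neuron $c$ in the output layer remains stable.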
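The training steps above can be sketched in plain Python as follows. This is a minimal illustration, not the toolbox implementation used in the experiments: the linear decay of the learning rate and of the neighborhood radius, the fixed number of epochs (in place of the stability test in step 6), and all function and parameter names are assumptions.

```python
import math
import random


def winner(weights, x):
    """Index c of the neuron whose weight vector is closest to x (Euclidean distance)."""
    return min(range(len(weights)), key=lambda j: math.dist(x, weights[j]))


def train_kohonen(samples, rows, cols, epochs=50, alpha0=0.5, radius0=1.5, seed=0):
    """Train a rows x cols Kohonen map and return its list of weight vectors."""
    rng = random.Random(seed)
    dim = len(samples[0])
    # Step 2: small random initial weights, one vector per competitive-layer neuron.
    weights = [[rng.uniform(0.0, 0.1) for _ in range(dim)]
               for _ in range(rows * cols)]
    grid = [(j // cols, j % cols) for j in range(rows * cols)]
    for t in range(epochs):
        frac = 1.0 - t / epochs
        alpha = alpha0 * frac      # variable learning rate alpha(t); linear decay is assumed
        radius = radius0 * frac    # shrinking neighborhood N_c(t); linear decay is assumed
        for x in rng.sample(samples, len(samples)):     # Step 3: present one input
            c = winner(weights, x)                      # Step 4: winning neuron
            for j, pos in enumerate(grid):              # Step 5: update c and its neighbors
                if math.dist(pos, grid[c]) <= radius:
                    weights[j] = [w + alpha * (xi - w)
                                  for w, xi in zip(weights[j], x)]
    return weights


if __name__ == "__main__":
    data = [[0.10, 0.12], [0.08, 0.10], [0.90, 0.88], [0.92, 0.91]]  # made-up vectors
    som = train_kohonen(data, rows=2, cols=2)
    print([winner(som, x) for x in data])
```

After training, `winner` plays the role of the classifier: samples that map to the same neuron index belong to the same class.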

3.4. Kohonen Classification Prediction Algorithm

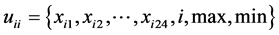

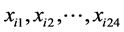

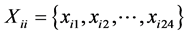

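1) Cluster the input signal to form the set of input feature vectors. Each sample in the cluster analysis is a feature vector constructed in units of data packets. A sample corresponds to one day of one detail-signal layer: its entries are the hourly traffic values of that day, and max and min denote the maximum and minimum traffic of that day;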

2) Normalize the feature vectors as follows:

$$x' = \frac{x - \min}{\max - \min} \tag{9}$$

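3) Feed the feature vectors into the Kohonen neural network for classification;

4) Perform BP neural network prediction according to the classification result. The training data for each BP neural network come from the network traffic of the standard samples classified beforehand. If a class contains n similar days after clustering, the sample signals of these n similar days are used to predict the data of the same detail layer on day i + 1. The training set consists of 24 samples (Sample 1, Sample 2, …, Sample 24), each pairing an input with an output. The training set should not be too large: too many samples cause the neural network to overlearn and degrade its prediction performance;

5) Check whether the required number of prediction days has been reached. If not, continue predicting; if so, stop and output the prediction results.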
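The classify-then-predict flow of steps 2) to 4) can be sketched as below. The sketch assumes min-max normalization of each day's values, represents each class by a prototype vector (for example, a trained Kohonen weight vector), and uses plain callables as hypothetical stand-ins for the per-class BP networks; none of these names come from the original implementation.

```python
import math


def min_max_normalize(values):
    """Scale one day's values to [0, 1] using that day's min and max."""
    lo, hi = min(values), max(values)
    if hi == lo:                       # constant day: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def classify(feature, prototypes):
    """Return the index of the class prototype nearest to the feature vector."""
    return min(range(len(prototypes)),
               key=lambda c: math.dist(feature, prototypes[c]))


def predict_next_day(day_values, prototypes, predictors):
    """Normalize, classify, then delegate to the predictor of the matched class."""
    feature = min_max_normalize(day_values)
    c = classify(feature, prototypes)
    return c, predictors[c](day_values)


if __name__ == "__main__":
    prototypes = [[0.0, 0.5, 1.0], [1.0, 0.5, 0.0]]       # hypothetical class centers
    predictors = [lambda d: [v * 1.1 for v in d],         # stand-ins for trained BP networks
                  lambda d: [v * 0.9 for v in d]]
    print(predict_next_day([2.0, 4.0, 6.0], prototypes, predictors))
```

Keeping one predictor per class mirrors the idea that days with similar traffic shape share a model, so each network only has to learn one pattern.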

4. Simulation Results and Analysis

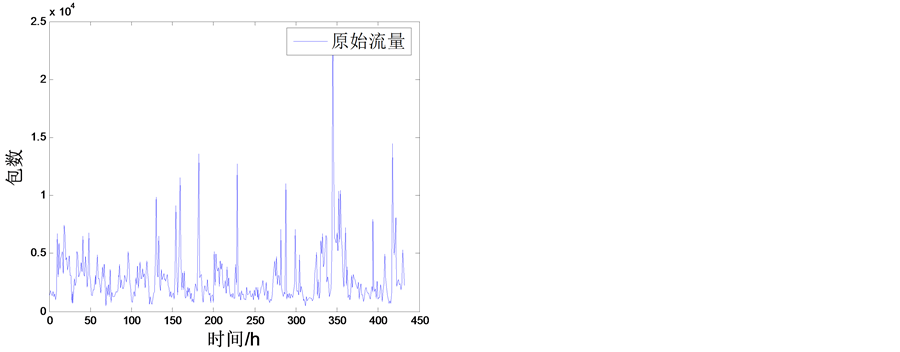

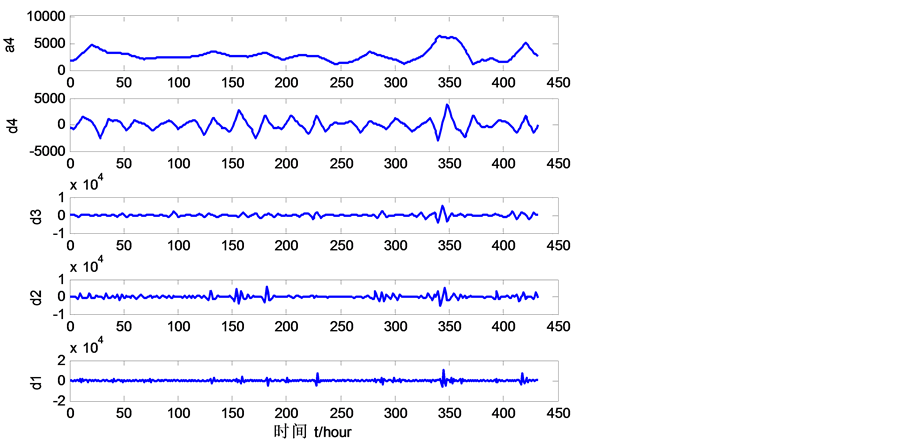

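This paper uses the traffic data of the first 11 days to predict the traffic of the following 4 days, with the simulation carried out in Matlab. The network traffic data are hourly historical records collected over 15 days from a router in a laboratory at Hangzhou Dianzi University, as shown in Figure 3.

Wavelet decomposition is applied first. Because a 4-level decomposition already meets the needs of this experiment, the original traffic $X$ is decomposed as [6]: $X = A_4 + D_1 + D_2 + D_3 + D_4$, as shown in Figure 4.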

Figure 3. The original data

Figure 4. Detail signals of the four-level wavelet decomposition

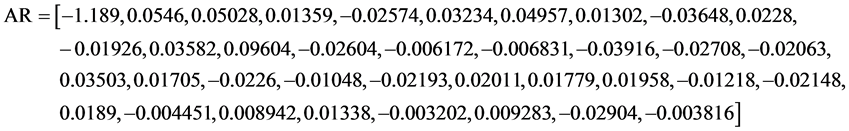

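Next, the approximation signal A4 is predicted with a combined AR and BP neural network model. In the first step, the order of the AR model is determined. This paper estimates the AR model with the AIC and BIC criteria and obtains an optimal prediction order of 37 [7]. The AR model parameters are as follows:

In the second step, the BP network is set to a three-layer 1 × 25 × 1 structure. The hidden layer uses the tansig transfer function, the output layer uses the purelin transfer function, the training function is trainlm, and the target error is 0.001.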
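The order selection in the first step can be illustrated with a small Python sketch. It assumes the AR coefficients are estimated from the Yule-Walker equations with the Levinson-Durbin recursion and that the order minimizing AIC = N ln(σ²) + 2p is chosen; the estimation method actually used is not stated, BIC is omitted for brevity, and all function names are illustrative.

```python
import math


def autocovariance(x, max_lag):
    """Biased sample autocovariance r[0..max_lag] of the mean-removed series."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    return [sum(d[t] * d[t + k] for t in range(n - k)) / n
            for k in range(max_lag + 1)]


def levinson_durbin(r, max_order):
    """Return [(p, coefficients, innovation variance)] for p = 1..max_order."""
    a, err, fits = [], r[0], []
    for p in range(1, max_order + 1):
        # Reflection coefficient for order p.
        k = (r[p] - sum(a[i] * r[p - 1 - i] for i in range(p - 1))) / err
        a = [a[i] - k * a[p - 2 - i] for i in range(p - 1)] + [k]
        err *= 1.0 - k * k
        fits.append((p, list(a), err))
    return fits


def select_ar_order(x, max_order):
    """Pick the AR order with the smallest AIC; return (order, coefficients)."""
    n = len(x)
    fits = levinson_durbin(autocovariance(x, max_order), max_order)
    best = min(fits, key=lambda f: n * math.log(f[2]) + 2 * f[0])
    return best[0], best[1]


def forecast_next(x, coeffs):
    """One-step-ahead AR forecast; coeffs[i] multiplies the value i + 1 steps back."""
    mean = sum(x) / len(x)
    return mean + sum(c * (x[-1 - i] - mean) for i, c in enumerate(coeffs))
```

Calling `select_ar_order(series, max_order)` on the approximation signal returns the chosen order and its coefficients, which `forecast_next` can then apply recursively for multi-step prediction.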

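Then the detail signals from the wavelet decomposition are classified with the Kohonen network, using the Matlab Neural Network Toolbox, across all 4 detail layers. Because the experimental sample is small, with only 15 days of traffic data, that is, 60 samples (15 days for each of the 4 detail layers), a 4 × 4 competitive layer suffices. The samples of the layer-1 detail signal are numbered 1, 2, …, 15, and so on, up to the layer-4 detail signal, whose samples are numbered 46, 47, …, 60. The network output shows that the 60 samples fall into 8 groups, as listed in Table 1.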
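The sample numbering described above (layer 1 takes 1 to 15, layer 4 takes 46 to 60) amounts to a simple index calculation, sketched here with hypothetical helper names:

```python
def sample_id(layer, day, days=15):
    """1-based sample number for detail layer `layer` (1..4) and day `day` (1..days)."""
    return (layer - 1) * days + day


def layer_and_day(sample, days=15):
    """Inverse mapping: recover (layer, day) from a 1-based sample number."""
    layer0, day0 = divmod(sample - 1, days)
    return layer0 + 1, day0 + 1


if __name__ == "__main__":
    print(sample_id(4, 1), layer_and_day(60))
```

The inverse mapping is convenient when reading a clustering table, since each listed sample number can be traced back to its detail layer and day.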

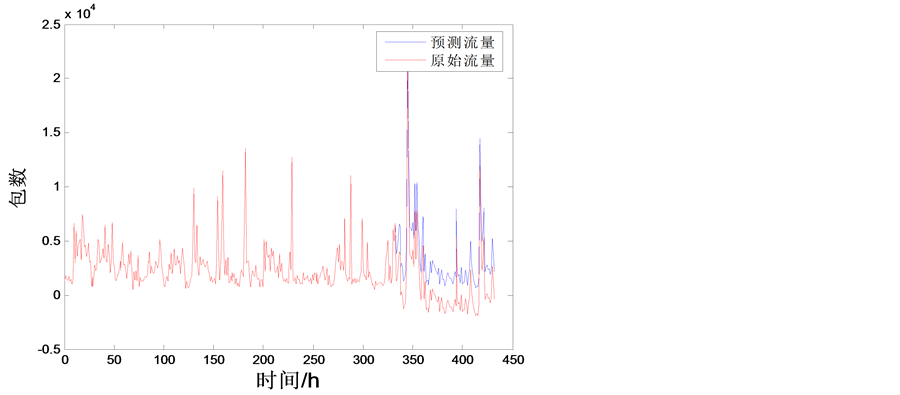

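The traffic curve predicted by the combined model and the actual traffic curve are shown in Figure 5.

As the prediction results in Figure 5 show, the prediction model based on the Kohonen neural network predicts the traffic of the last 4 days well. To compare this model with traditional prediction methods, the relative mean square error (RMSE) is used as the evaluation criterion; the smaller the value, the more accurate the prediction. The results in Table 2 show that the prediction model based on the Kohonen neural network achieves higher prediction accuracy than the traditional prediction models.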
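As a rough illustration of such an error measure, the sketch below computes two candidate definitions. Both are assumptions, because the exact formula is not reproduced in the text: `relative_mse` takes the mean of the squared relative errors, and `root_mse` is the conventional root mean square error that the abbreviation RMSE usually denotes.

```python
import math


def relative_mse(actual, predicted):
    """Mean of squared relative errors; assumes no actual value is zero."""
    return sum(((a - p) / a) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def root_mse(actual, predicted):
    """Conventional root mean square error, in the units of the data."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


if __name__ == "__main__":
    actual = [10.0, 20.0]        # made-up traffic values
    predicted = [9.0, 22.0]
    print(relative_mse(actual, predicted), root_mse(actual, predicted))
```

The relative form is scale-free, so it stays comparable between busy and quiet hours, whereas the root form weights errors during high-traffic periods more heavily.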

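5. Conclusion

This paper applies the Kohonen neural network to network traffic prediction and proposes a combined traffic prediction model based on the Kohonen neural network.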

Table 1. Sample clustering results

Figure 5. Traffic prediction by the combined model based on the Kohonen neural network

Table 2. RMSE comparison with traditional prediction models

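The model combines neural networks, wavelet decomposition, and AR linear prediction, and predicts the traffic of the following 4 days from the historical traffic of the first 11 days. The results show that this combined prediction model can improve prediction accuracy for nonlinear network traffic that varies across multiple time scales.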